Outstanding, three contestants arrived within 10 minutes of each other!

This is the blog of the Pegasus Team in DARPA's Grand Challenge (2005-2007). Our vehicle's name is Pegasus Bridge 1.

# This code is released under the General Public Licence (GPL).
# DISCLAIMER: if you do not know what you are doing, do not use this
# program on a motorized vehicle or any machinery for that matter.
# Even if you know what you are doing, we absolutely cannot be held
# responsible for your use of this program under ANY circumstances.
# The program is released for programming education purposes only,
# i.e. reading the code will allow students to understand the flow
# of the program. We do not advocate nor condone the use of this
# program in any robotic systems. If you are thinking about including
# this software in machinery of any sort, please don't do it.
#
# This program was written by Pedro Davalos, Shravan Shashikant,
# Tejas Shah, Ramon Rivera and Igor Carron
#
# Light version of the Drive-by-wire program
# for Pegasus Bridge 1 - DARPA GC 2005
#

import sys, traceback, os.path, string
import thread, time, serial, parallel
import re
import struct
import msvcrt
import pyTTS

rootSaveDir = "C:\\PegasusBridgeOne\\"
gpsSaveDir = os.path.join(rootSaveDir,"gps")
ctlSaveDir = os.path.join(rootSaveDir,"control")
stopProcessingFlag = 0
printMessagesFlag = 1
p = None
tts = pyTTS.pyTTS()
gps_data = list()
imu_data = list()

def runnow():
    print "Drive-by-wire program running now"
    #launch controller thread
    t = thread.start_new_thread(control_sensors,())
    #launch gps sensor thread
    t1 = thread.start_new_thread(read_gps_data,())

def control_sensors():
    global stopProcessingFlag
    global ctlSaveDir
    global p
    printMessage("Control thread has started")
    p=parallel.Parallel()
    p.ctrlReg = 9 #x&y axis
    p.setDataStrobe(0)
    sleep = .01
    while (stopProcessingFlag != 1):
        key = msvcrt.getch()
        length = len(key)
        if length != 0:
            if key == " ": # check for quit event
                stopProcessingFlag = 1
            else:
                # arrow keys arrive as a prefix byte followed by a scan code
                if key == '\x00' or key == '\xe0':
                    key = msvcrt.getch()
                    print ord(key)
                    strsteps = 5
                    accelsteps=1
                    if key == 'H': # up arrow
                        move_fwd(accelsteps, sleep)
                    if key == 'M': # right arrow
                        move_rte(strsteps, sleep)
                    if key == 'K': # left arrow
                        move_lft(strsteps, sleep)
                    if key == 'P': # down arrow
                        move_bak(accelsteps, sleep)
    print "shutting down"
    # ctl_ofile.close() is left out: this light version never opens ctl_ofile

def read_gps_data():
    global gpsSaveDir
    global stopProcessingFlag
    global gps_data
    printMessage("GPS thread starting.")
    #Open Serial Port with baud 38400 on port 5 (Com6)
    ser = None
    try:
        gps_ofile = open(''.join( \
            [gpsSaveDir,'\\',time.strftime("%Y%m%d--%H-%M-%S-"),
            "-gps_output.txt"]),"w")
        ser = serial.Serial(5, 38400, timeout=2)
        while (stopProcessingFlag != 1):
            resultx = ser.readline()
            # if re.search('GPRMC|GPGGA|GPZDA', resultx):
            splt = re.compile(',')
            ary = splt.split(resultx)
            gps_ofile.write(str(get_time() + ', '+resultx))
            gps_data.append([get_time(), resultx])
            # keep only the 100 most recent readings in memory
            if (len(gps_data)> 100): gps_data.pop(0)
        ser.close()
        gps_ofile.close()
    except:
        write_errorlog("GPS Read method " )
        if ser != None:
            ser.close()

def write_errorlog(optional_msg=None,whichlog='master'):
    if optional_msg!=None:
        writelog(whichlog,"\nERROR : "+ optional_msg)
    type_of_error = str(sys.exc_info()[0])
    value_of_error = str(sys.exc_info()[1])
    tb = traceback.extract_tb(sys.exc_info()[2])
    tb_str = ''
    # error printing code goes here

def get_time(format=0):
    stamp = '%1.6f' % (time.clock())
    if format == 0:
        return time.strftime('%m%d%Y %H:%M:%S-', \
            time.localtime(time.time())) +str(stamp)
    elif format == 1:
        return time.strftime('%m%d%Y_%H%M', \
            time.localtime(time.time()))

def if_none_empty_char(arg):
    if arg == None:
        return ''
    else:
        return str(arg)

def writelog(whichlog,message):
    global rootSaveDir
    logfile = open(os.path.join(rootSaveDir, \
        whichlog+".log"),'a')
    logfile.write(message)
    logfile.close()
    printMessage(message)

def printMessage(message):
    global printMessagesFlag
    if printMessagesFlag == 1:
        print message

def move_fwd(steps, sleep):
    global p
    for i in range (0,steps):
        p.setData(32)
        p.setData(0)
        time.sleep(sleep)

def move_bak(steps, sleep):
    global p
    for i in range (0,steps):
        p.setData(48)
        p.setData(0)
        time.sleep(sleep)

def move_lft(steps, sleep):
    global p
    for i in range (0,steps):
        p.setData(12)
        p.setData(0)
        time.sleep(sleep)

def move_rte(steps, sleep):
    global p
    for i in range (0,steps):
        p.setData(8)
        p.setData(0)
        time.sleep(sleep)

print 'Hello'
tts.Speak("Hello, I am Starting the control Program for Pegasus Bridge One")
time.sleep(4)
runnow()
while (stopProcessingFlag != 1):
    time.sleep(5)
print 'Bye now'
tts.Speak("Destination reached, Control Program Aborting, Good Bye, have a nice day")
time.sleep(4)
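
The GPS thread in the listing above writes every raw line from the receiver to disk, and its commented-out filter mentions the GPRMC, GPGGA and GPZDA sentence types. As an illustration only, here is a minimal sketch of how such NMEA lines could be checked and split during postprocessing. It is not part of the Pegasus Bridge 1 program: the helper names `nmea_checksum`, `parse_nmea` and `to_decimal_degrees` are hypothetical, the code is written for Python 3 (unlike the Python 2 listing), and it assumes each line has already been decoded to text.

```python
from functools import reduce
import operator


def nmea_checksum(payload):
    """XOR of every character between '$' and '*', as two uppercase hex digits."""
    return "%02X" % reduce(operator.xor, (ord(c) for c in payload), 0)


def parse_nmea(line):
    """Return (sentence_type, fields), or None if the line is malformed
    or its checksum does not match."""
    line = line.strip()
    if not line.startswith("$") or "*" not in line:
        return None
    payload, _, given = line[1:].partition("*")
    if nmea_checksum(payload) != given[:2].upper():
        return None
    fields = payload.split(",")
    return fields[0], fields[1:]


def to_decimal_degrees(value, hemisphere):
    """Convert NMEA ddmm.mmmm (or dddmm.mmmm) to signed decimal degrees.
    Assumes the value contains a decimal point."""
    dot = value.index(".")
    degrees = float(value[:dot - 2])
    minutes = float(value[dot - 2:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


if __name__ == "__main__":
    # Build a sample sentence and let the helper compute its checksum.
    payload = "GPRMC,123519,A,4807.038,N,01131.000,E,022.4,084.4,230394,003.1,W"
    line = "$%s*%s" % (payload, nmea_checksum(payload))
    kind, fields = parse_nmea(line)
    lat = to_decimal_degrees(fields[2], fields[3])
    lon = to_decimal_degrees(fields[4], fields[5])
    print(kind, lat, lon)
```

Rejecting lines with a bad checksum before logging or fusing them keeps serial-line noise out of the later processing steps.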

The same thing will happen if you're running a startup, of course. If you do everything the way the average startup does it, you should expect average performance. The problem here is, average performance means that you'll go out of business. The survival rate for startups is way less than fifty percent. So if you're running a startup, you had better be doing something odd. If not, you're in trouble.

Eventually our interest converges on fusing data from inertial and vision sensors in order to provide navigability as well as obstacle avoidance, much as humans do with their eyes and vestibular system. This subject has only recently been looked into by the academic community, even though much has been done on Structure From Motion. A related interest is how gaze following tells us something about learning.

Besides GPS, IMU and visual data, we are using audio as well. The driving behavior of humans is generally related to the type of noise they hear when driving. We are also looking at fusing this type of data with the video and the IMU. If you have driven in the Mojave desert, you know that there are stranger sounds than the ones you've heard on a highway. They tell you something about the interaction between one of your sensors (the tires) and the landscape. This is by no means data to be thrown out, since your cameras tell you whether the same landscape is to be expected ahead of the vehicle.

Making Data Freely Available and Enabling Potential Collaborative Work with People We Presently Do Not Know

One of the reasons we put some data on our web site (GPS and IMU: acceleration, heading and rotation rate) is that we want people who have neither the means nor the interest to set up their own data-gathering system to still be able to use real-world data for some initial findings. More data will be coming within the coming months, and we are looking for some type of academic or research institution to host these data. We have collected 40 gigabytes of data so far, but with a bandwidth allowance of 42 GB per month we do not expect to survive any interest from the robotics community past the first person who downloads all of it. (More than 30 people have downloaded the larger file since we made an announcement on robots.net a month and a half ago.)

Teaming with other researchers who work specifically in this area is of interest, but it is not our main strategy. The odd part of this strategy is the statement that data do not matter as much as making sense of them. It doesn't matter if you have driven 100 miles and gathered data with your vehicle if you have not done the most important part: postprocessing.

Using the Right Programming Language

We are currently using the Python programming language. Why? For several reasons,

Using the Right Classification Approach

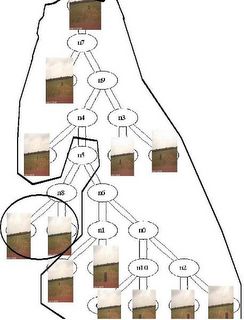

One of our approaches to classifying images for road following as well as obstacle avoidance is based on defining some type of distance between drivable and non-drivable scenes. There are many different projection techniques available in the literature. Let us take the example of the "zipping-classifier" mechanism and how we are using it for obstacle avoidance. This technique uses a compressor like Winzip or 7-zip to produce some type of distance between files (here, images). A very nice description of it can be read here. The whole theory is based on Minimum Description Length. An example of a naive classification made on pictures we took at the site visit in San Antonio is shown in the accompanying tree. In the interest of reproducibility, we used CompLearn to produce this tree. (Rudi Cilibrasi is the developer of CompLearn.) The tree clearly separates the open areas from the ones with trash cans. We did no processing on the pictures. Obviously, our approach is much more specific in defining this distance between a "good" road and a not-so-good one.
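
To make the idea of a compression-based distance concrete, here is a minimal sketch of the normalized compression distance (NCD), the measure associated with CompLearn, followed by a nearest-neighbour labelling step. It is an illustration under assumptions, not our classifier: it uses Python's `zlib` in place of Winzip or 7-zip, the function names `compressed_size`, `ncd` and `classify` are hypothetical, and the byte strings in the demo are synthetic stand-ins for image files. Because zlib only looks back 32 KB, a compressor such as `bz2` or `lzma` is likely a better choice for real images.

```python
import zlib


def compressed_size(data):
    """Length in bytes of the zlib-compressed input."""
    return len(zlib.compress(data, 9))


def ncd(x, y):
    """Normalized compression distance between two byte strings:
    (C(xy) - min(C(x), C(y))) / max(C(x), C(y))."""
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def classify(sample, labelled):
    """Return the label of the labelled example closest to sample under NCD.
    labelled is a list of (label, bytes) pairs."""
    return min(labelled, key=lambda item: ncd(sample, item[1]))[0]


if __name__ == "__main__":
    # Synthetic stand-ins; real use would read image files in binary mode.
    open_area = b"asphalt gravel sand " * 100
    obstacle = bytes(range(256)) * 8
    new_scene = b"asphalt gravel sand " * 80
    labelled = [("drivable", open_area), ("non-drivable", obstacle)]
    print(classify(new_scene, labelled))
```

Two inputs that share structure compress better together than apart, so their distance is small. Unrelated inputs give a value close to 1.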

Let's hope we are doing something odd enough.