The same thing will happen if you're running a startup, of course. If you do everything the way the average startup does it, you should expect average performance. The problem here is, average performance means that you'll go out of business. The survival rate for startups is way less than fifty percent. So if you're running a startup, you had better be doing something odd. If not, you're in trouble.

While we are not going to discuss everything about how we are approaching the vehicle for the Grand Challenge, we can describe how we are doing something odd:

Using the Sensors We Understand

Our approach to the Race is to use no range sensors such as lidars. Why?

- First, because everybody else seems to be using them, and it does not make sense to differentiate ourselves by how much money we can spend on a specific sensor; we know we can easily be outspent.

- Second, because we see little use for the technology developed in this project unless it makes more sense of the kind of data humans generally gather to make decisions. In the end, research based on sensors that humans are accustomed to has far more possible applications than research that makes sense of data from specially crafted sensors such as lidars. There is a cost associated with devising new sensors in a new range of wavelengths, but the central issue is that we have not yet made sense of the data we already had access to. If you have been so unsuccessful at making sense of data from sensors you have been using all your life (most of us, at least, have had vision), why do you think you are going to be more successful with other types of sensors? We are not saying it is easy. As the literature shows, it is still a big issue in the robotics vision community, but we think we have a good strategy for sensing and making sense of data.

- Third, range sensors did not seem to help the competitors last year.

Ultimately, our interest converges on fusing data from inertial and vision sensors in order to provide navigation as well as obstacle avoidance capability, as humans do with their eyes and vestibular system. This subject has only recently been looked into by the academic community, even though much has been done on Structure From Motion. A related interest is what gaze following tells us about learning.
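As an illustration of the simplest kind of inertial/vision fusion, here is a minimal sketch of a complementary filter on heading. It assumes a gyro yaw rate in rad/s sampled at a fixed time step and an occasional heading estimate derived from the cameras (for instance from Structure From Motion). The complementary filter, the function names and the `alpha` weight are illustrative choices, not a description of the fusion we actually run.

```python
import math
from typing import List, Optional, Sequence


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def fuse_heading(gyro_rates: Sequence[float],
                 vision_headings: Sequence[Optional[float]],
                 dt: float,
                 alpha: float = 0.98,
                 initial: float = 0.0) -> List[float]:
    """Complementary filter: gyro integration corrected by vision headings.

    Assumption (illustrative only): vision_headings holds one entry per gyro
    sample, or None when no visual heading estimate is available.
    """
    heading = initial
    history = []
    for rate, vision in zip(gyro_rates, vision_headings):
        # Predict: integrate the gyro rate (smooth, but drifts with any bias).
        heading = wrap_angle(heading + rate * dt)
        if vision is not None:
            # Correct: move a fraction (1 - alpha) of the way toward the vision
            # estimate, taking the shortest way around the circle.
            error = wrap_angle(vision - heading)
            heading = wrap_angle(heading + (1.0 - alpha) * error)
        history.append(heading)
    return history


# A stationary vehicle whose gyro has a 0.01 rad/s bias, sampled at 10 Hz for 100 s.
rates = [0.01] * 1000
gyro_only = fuse_heading(rates, [None] * 1000, dt=0.1)
with_vision = fuse_heading(rates, [0.0] * 1000, dt=0.1)
print(f"gyro only: {gyro_only[-1]:.3f} rad, fused: {with_vision[-1]:.3f} rad")
```

The gyro term keeps the estimate smooth between camera frames, while the small vision correction cancels the slow drift a biased gyro accumulates. A Kalman filter would weight the two sources by their noise statistics instead of a fixed `alpha`.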

Besides GPS, IMU and visual data, we are using audio as well. The driving behavior of humans is generally related to the type of noise they hear when driving. We are also looking at fusing this type of data with the video and the IMU. If you have driven in the Mojave desert, you know that there are stranger sounds there than the ones you hear on a highway. They tell you something about the interaction between one of your sensors (the tires) and the landscape. This is by no means data to be thrown out, since your cameras tell you whether the same landscape is to be expected ahead of the vehicle.
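One plausible way to put tire and road noise on the same footing as the IMU stream is to reduce the audio to one loudness value per IMU sample. The sketch below computes the root-mean-square (RMS) level of consecutive windows of PCM samples; setting `window_s` to the IMU sampling period gives one value per IMU reading. The RMS feature and the function name are assumptions for illustration. Samples from a 16-bit mono WAV file could, for example, be read with the standard `wave` module and decoded with `array.array('h', ...)`.

```python
import math
from typing import List, Sequence


def rms_per_window(samples: Sequence[int], sample_rate: int, window_s: float) -> List[float]:
    """RMS level of consecutive, non-overlapping windows of PCM samples.

    A trailing partial window is dropped so every value covers the same duration.
    """
    n = max(1, int(round(sample_rate * window_s)))  # samples per window
    levels = []
    for start in range(0, len(samples) - n + 1, n):
        window = samples[start:start + n]
        levels.append(math.sqrt(sum(s * s for s in window) / n))
    return levels


# Example: 8 kHz audio summarised at a 10 Hz IMU rate (0.1 s windows).
quiet = [1000] * 800          # steady, low-level hum
rough = [-3000, 3000] * 400   # louder signal, e.g. gravel under the tires
print(rms_per_window(quiet + rough, sample_rate=8000, window_s=0.1))  # prints [1000.0, 3000.0]
```

Each RMS value can then be stored next to the IMU sample with the same timestamp, so a sudden rise in loudness can be compared with what the accelerometers and cameras report at that moment.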

Making Data Freely Available and Enabling Potential Collaborative Work with People We Presently Do Not Know

One of the reasons we put some data featuring GPS and IMU readings (acceleration, heading and rotation rate) on our web site is that we want people who have neither the means nor the interest to set up a data-gathering system of their own to still be able to use real-world data for some initial findings. More data will become available within the coming months, and we are looking for an academic or research institution to host it. We have collected 40 gigabytes of data so far, but our monthly bandwidth allowance is only 42 GB, so a single full download uses about 95% of it. We do not expect to survive any interest from the robotics community past the first person who downloads all of it (more than 30 people downloaded the larger file after we made an announcement on robots.net a month and a half ago).

Teaming with other researchers who specialize in this area is of interest, but it is not our main strategy. The odd part of this strategy is the statement that data do not matter as much as making sense of them. It doesn't matter if you have driven 100 miles and gathered data with your vehicle if you have not done the most important part: postprocessing.

Using the Right Programming Language

We are currently using the Python programming language. Why? For several reasons:

- We do not want to optimize prematurely for the various challenges that arise in building an autonomous vehicle, so we are not using C/C++, Java or any real-time operating system, either for development or for the final program. Most job announcements on other teams' web sites look for Java or C/C++ programmers.

- Python is close to pseudo-code, and the learning curve for newcomers to the team as well as for veterans is very smooth, especially for those coming from Matlab.

- Python provides glue, libraries and modules for all kinds of needs within the project (from linear algebra with LAPACK bindings, to image analysis, plotting, voice activation with PyTTS, sound recording and others).

- Thanks to Moore's law, Python is fast enough on Windows, and we would not lose much sleep if we had to switch to a different OS or write modules in C for the parts that need to be faster. Using Windows also helps in debugging the programs, since our team is geographically distributed and everyone generally uses Windows on their laptops.

Using the Right Classification Approach

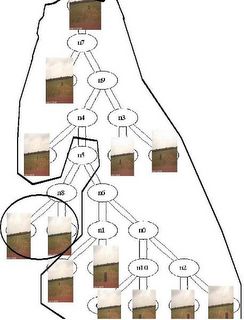

One of our approaches to classifying images for road following as well as obstacle avoidance is based on defining some type of distance between drivable and non-drivable scenes. There are many different projection techniques available in the literature. Let us take the example of the "zipping-classifier" mechanism and how we are using it for obstacle avoidance. This technique uses a compressor such as WinZip or 7-Zip to produce a distance between files (here, images). A very nice description of it can be read here. The whole theory is based on Minimum Description Length. An example of a naive classification made on pictures we took at the site visit in San Antonio is shown below. In the interest of reproducibility, we used CompLearn to produce this tree (Rudi Cilibrasi is the developer of CompLearn). The tree clearly separates the open areas from the ones with trash cans. We did no processing on the pictures. Obviously, our actual approach is much more specific in defining this distance between a "good" road and a not-so-good one.
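The compression distance behind this kind of tree is usually the normalized compression distance (NCD) of Cilibrasi and Vitányi, NCD(x, y) = (C(xy) - min(C(x), C(y))) / max(C(x), C(y)), where C(x) is the compressed length of x. Below is a minimal sketch using Python's standard `lzma` module as the compressor (LZMA is also 7-Zip's default method). Feeding raw bytes and the function names are assumptions; random byte strings stand in for pictures, and CompLearn's own step that turns the distance matrix into a tree is not reproduced here.

```python
import lzma
import random
from typing import List, Sequence


def compressed_size(data: bytes) -> int:
    """C(x): length in bytes of the LZMA-compressed input."""
    return len(lzma.compress(data))


def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance: near 0 for similar inputs, near 1 for unrelated ones."""
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + y)  # the compressor can reuse structure shared by x and y
    return (cxy - min(cx, cy)) / max(cx, cy)


def distance_matrix(items: Sequence[bytes]) -> List[List[float]]:
    """Pairwise NCD matrix, the input a tree-building step would cluster."""
    return [[ncd(a, b) for b in items] for a in items]


# Synthetic stand-ins for image bytes: two near-identical "scenes" and one unrelated one.
rng = random.Random(0)
open_area = bytes(rng.randrange(256) for _ in range(4000))
open_area_2 = bytearray(open_area)
for i in rng.sample(range(len(open_area_2)), 20):
    open_area_2[i] ^= 0xFF  # perturb 20 bytes
trash_can = bytes(rng.randrange(256) for _ in range(4000))

for row in distance_matrix([open_area, bytes(open_area_2), trash_can]):
    print(" ".join(f"{d:.2f}" for d in row))
```

The two similar inputs end up close together and far from the unrelated one. The diagonal is small but not exactly zero, because a real compressor is only an approximation of the ideal one the theory assumes.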

classification of trash can obstacle

Using the Google API for images, we can build libraries of relevant information for road-driving purposes and compute their "distance" to the road driven by our vehicle.
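Once such a library exists, classifying the current camera frame can be as simple as a nearest-neighbour vote under a compression distance. The sketch below assumes the library is a list of `(label, image_bytes)` pairs that have already been fetched and labelled (the Google image search step is not shown), uses `zlib` as the compressor, and uses hypothetical names (`classify_frame`, `perturbed`, `k`). It is a simplification of the idea, not the classifier we actually use.

```python
import random
import zlib
from collections import Counter
from typing import Sequence, Tuple


def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance using zlib (DEFLATE) as the compressor."""
    cx, cy = len(zlib.compress(x, 9)), len(zlib.compress(y, 9))
    cxy = len(zlib.compress(x + y, 9))
    return (cxy - min(cx, cy)) / max(cx, cy)


def classify_frame(frame: bytes, library: Sequence[Tuple[str, bytes]], k: int = 3) -> str:
    """Majority label among the k library images closest to the frame."""
    nearest = sorted(library, key=lambda item: ncd(frame, item[1]))[:k]
    votes = Counter(label for label, _ in nearest)
    return votes.most_common(1)[0][0]


def perturbed(base: bytes, rng: random.Random, n: int = 20) -> bytes:
    """Copy of base with n bytes flipped: a synthetic 'similar scene'."""
    out = bytearray(base)
    for i in rng.sample(range(len(out)), n):
        out[i] ^= 0xFF
    return bytes(out)


rng = random.Random(1)
road = bytes(rng.randrange(256) for _ in range(2000))
obstacle = bytes(rng.randrange(256) for _ in range(2000))
library = [("drivable", perturbed(road, rng)), ("drivable", perturbed(road, rng)),
           ("non-drivable", perturbed(obstacle, rng)), ("non-drivable", perturbed(obstacle, rng))]
print(classify_frame(perturbed(road, rng), library))  # prints drivable
```

With `k = 1` this reduces to labelling the frame like its single closest library image; a larger `k` makes the vote less sensitive to one mislabelled example. Inputs are kept small here because DEFLATE only looks back 32 KB for repeated content.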

Let's hope we are doing something odd enough.